Introduction

We've been waiting for the ATi Radeon 8500 to appear for quite some time. NVIDIA have had this class of graphics card to themselves since the GeForce3 was released: 3dfx are no more and Matrox don't make gaming cards now, so nobody else has been competing in the performance gaming sector.

ATi announced the R8500 to the world on the 14th of August to much fanfare. It was to ship at 250MHz and cost a recommended $399. At the time, ATi were making bold claims for their new hardware.

'The RADEON 8500 clearly positions ATI as the technology and performance leader. Enthusiasts will find an unequalled visual experience in the RADEON 8500,' said Rick Bergman, Senior Vice President & General Manager, Desktop Marketing, ATI Technologies Inc. 'With the RADEON 8500 GPU (graphics processing unit), the industry moves a quantum step forward, towards full visual reality on the PC and Macintosh platforms.'

Grand claims indeed for their new baby. Fast forward to 9th October, a little later than the planned September launch, and ATi announced that cards were ready. By this time we knew that the core clock had increased to 275MHz, giving parity with the memory clock, and that the price had dropped a whopping $100 to $299. The card was Windows XP ready, complete with XP drivers, and would ship on the 25th of that month.

Here I am a month later with the review, and what a month it's been. We've had the card in the labs for a little while now, and we held off on a review because of one thing: the drivers. A lot has been said recently in the technology press about ATi's drivers. Developers like John Carmack and Derek Smart have given their views on ATi's drivers, the driver writers and some of the things the driver does to improve performance in certain applications like Quake 3 and 3DMark 2001.

For a little while, the drivers for our testing platform, Windows XP, the one ATi made much fanfare about on October the 9th, weren't in that good a shape. We struggled with performance and, while we have no qualms about giving a bad review where a bad review is deserved, we had faith in the hardware. The R8500 is a very nice piece of technology and, like all commodity PC hardware, needs drivers to function properly.

On November 14th ATi released a driver we were happy to use in testing and base a review around. So while our review is a little later than some others, it's a review we are happy to release. We'll ignore the furore around the way the driver achieves performance in some applications. Everyone has their views and we have ours, but we're not here to dissect all that. We hope you just want to know how the card performs, what the visual quality is like, whether it runs your games well and so on.

The Technology

So with all that out of the way, let's take a look at the tech behind the 8500. For a start, it's the first ATi graphics processor to be manufactured on a 0.15 micron process. Previous Radeons were all 0.18 micron parts, so the new R200 core runs faster and cooler. It packs in around 60 million transistors, 3 million more than the GeForce 3, which itself is more complex than the Pentium 4 processor in terms of transistor count, so we have a hefty piece of silicon.

ATi have packed onto that wafer an array of technologies they hope will give it the performance to beat the GeForce 3. First off we have the Charisma Engine II. Following on from the original found on the Radeon, the Charisma Engine is the name ATi has given to the Transform and Lighting (T&L) engine.

The T&L engine works in tandem with their SMARTSHADER tech which provides the vertex and pixel shader capability on the card and allows it to implement the DirectX 8.1 specification in full. OpenGL 1.3 is also supported.

Pixel Tapestry II is the R200's rendering engine, the heart of the new chip. It gives us 4 rendering pipelines, each capable of processing 6 textures in a single rendering pass. This gives the R200 a fillrate of 2.2GTexels/sec, and with a 128-bit DDR interface to 64MB of memory at 275MHz it has access to 8.8GB/sec of memory bandwidth, eclipsing the 7.36GB/sec provided by the GeForce3.
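For anyone wanting to check those headline numbers, here is a rough sketch of the arithmetic in Python. The bus width, memory clock and pipeline count come from the figures above; the two textures per pipeline per clock and the GeForce3's 230MHz memory clock are assumptions on our part, chosen because they reproduce the quoted 2.2GTexels/sec and 7.36GB/sec. The function names are purely illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits, clock_mhz, transfers_per_clock=2):
    """Peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    transfers_per_clock is 2 for DDR memory, which moves data on both
    the rising and falling clock edges.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_mhz * 1e6 * transfers_per_clock / 1e9


def texel_fillrate_gtexels_s(pipelines, textures_per_clock, core_mhz):
    """Peak texel fillrate in GTexels/s."""
    return pipelines * textures_per_clock * core_mhz * 1e6 / 1e9


# Radeon 8500: 128-bit DDR interface at 275MHz, 4 pipelines at 275MHz core.
# ASSUMPTION: 2 textures applied per pipeline per clock.
radeon_bw = memory_bandwidth_gb_s(128, 275)
radeon_fill = texel_fillrate_gtexels_s(4, 2, 275)

# GeForce3 for comparison.
# ASSUMPTION: 128-bit DDR interface at a 230MHz memory clock.
geforce3_bw = memory_bandwidth_gb_s(128, 230)

print(f"Radeon 8500 bandwidth: {radeon_bw:.2f} GB/s")
print(f"Radeon 8500 fillrate:  {radeon_fill:.2f} GTexels/s")
print(f"GeForce3 bandwidth:    {geforce3_bw:.2f} GB/s")
```

These are theoretical peaks only; real-world throughput depends on access patterns, drivers and the application.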

To help optimise that memory bandwidth we have HyperZ II. HyperZ first appeared on the original Radeon. HyperZ II gives an effective 25% increase in memory bandwidth by clearing the Z buffer very quickly, compressing the Z buffer on the fly and discarding hidden pixels before rendering.

Added to all this is SMOOTHVISION, ATi's method of full scene anti-aliasing and effects like motion blur and depth of field. Then we have TRUFORM, which smooths out the edges of models in 3D games and applications. It requires developer support to implement.

Finally we have HYDRAVISION, ATi's dual head implementation like NVIDIA's TwinView and the original consumer implementation shown to us by Matrox. It does dual DVI, combinations of panel, CRT and TV and supports UXGA panels at up to 1600x1200 resolution.

Card Installation and Driver

I won't hide the fact that I've never had as much trouble making a graphics card work. It took me 6 hours in total to get the card working and I gave up once. The operating system had seen a GeForce3 and a pair of Ti500s in the period before I installed the R8500. I'm not sure if the previous cards were to blame or whether ATi's driver install routine isn't the best, but uninstalling the 22.40 Detonators using the Windows XP control panel and running the ATi driver installation program finally worked. During the time that it didn't work, the explorer shell would hang and dialup networking was completely unusable (I could connect, but the connection would die after a few seconds and the modem wouldn't hang up).

However I finally got the Windows XP Display Driver build 6.13.10.3286 installed and the card was ready for use. Here are a couple of screenshots of the driver property pages for the D3D and OpenGL parts of the driver settings.

As you can see, the driver provides the common adjustments for Direct3D and OpenGL, such as level of detail and vsync.

So after the initial installation hiccups, everything has been extremely smooth. I haven't experienced a single driver crash throughout testing.

Card Features

Before we move on to performance, I'll quickly go over some of the features of the card and how they performed in real world terms. First of all, HydraVision. I was able to use my TV as a second display for watching DVDs whilst still doing work. I was also able to hook up a 2nd monitor via the supplied DVI-to-DSub adapter, although that wasn't tested for long. You can set the refresh rate, colour depth and resolution of the heads independently and everything seemed to work fine. Again, however, extensive testing wasn't done on this feature.

DVD playback was fine. However, this being a review sample, I didn't have the full ATi CD that ships with the Radeon and so wasn't able to install ATi's supposedly excellent DVD player software. WinDVD worked fine, though, and I think it supports the hardware iDCT on the Radeon series of cards. Performance was smooth, but I can't confirm whether the iDCT hardware acceleration was actually being used.

Performance

Test System:

For benchmarking Quake3 we used Q3Bench with the following settings.

Main tab

Custom config tab

So for benching Q3 we used resolutions above 1024x768 in 32-bit colour and 32-bit textures with all quality rendering options on to really stress the card.

For benchmarking Unreal Tournament we used the same resolutions and the Thunder demo from Reverend. Direct3D render options in the preferences dialog were set to defaults.

For 3DMark we did a default bench with everything as standard. No driver tweaks were used. V-Sync was off for all 3 tests.

The 3 tests give us a broad look at the card's performance using 2 DirectX benchmarks and an OpenGL benchmark. 3DMark also tests DirectX 8 features like pixel and vertex shaders. No two applications are the same and it's not wise to generalise performance from such a small cross section, but these 3 are the 'standard' applications used in most reviews, so we use them.

Performance Results

Card clocks for these results were the standard, out of the box clocks of 275(550)MHz memory and 275MHz core.

Here we can see the performance of the card tail off as the resolution is pushed. The fillrate of the card is increasingly needed as we increase resolution. Performance on the P4 is exceptional. The average framerate, even at 1600x1200 with all options on, is 122fps, which makes it very playable even at such a high resolution. Top marks to the card in Quake3.
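To put the fillrate point in rough numbers, the sketch below counts the pixels the card has to draw per frame at a few resolutions, and per second at the 122fps average quoted for 1600x1200. Only 1600x1200 and the 1024x768 floor come from the review; 1280x1024 is an assumed intermediate step, and the helper names are hypothetical. The count covers visible pixels only and ignores overdraw and multitexturing, so real fillrate demand is higher.

```python
# ASSUMPTION: 1280x1024 is included as a plausible intermediate step;
# the review itself only names 1024x768 and 1600x1200.
RESOLUTIONS = [(1024, 768), (1280, 1024), (1600, 1200)]


def pixels_per_frame(width, height):
    """Visible pixels in one frame at the given resolution."""
    return width * height


def pixels_per_second(width, height, fps):
    """Visible pixels drawn per second at a given average framerate."""
    return pixels_per_frame(width, height) * fps


baseline = pixels_per_frame(1024, 768)
for width, height in RESOLUTIONS:
    count = pixels_per_frame(width, height)
    print(f"{width}x{height}: {count:,} pixels per frame, "
          f"{count / baseline:.2f}x the 1024x768 load")

# 122fps is the Quake3 average quoted for 1600x1200 in the text above.
rate = pixels_per_second(1600, 1200, 122)
print(f"1600x1200 at 122fps: {rate / 1e6:.1f} million visible pixels/s")
```

Going from 1024x768 to 1600x1200 means roughly two and a half times as many pixels per frame, which is why the framerate tails off as the resolution climbs.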

Next up we have Unreal Tournament. It's the retail Game Of The Year edition and it's version 436 out of the box so it doesn't require patching. Just install and go. Unreal Tournament is more affected by CPU speed than graphics card speed but you can still see the effect increasing the resolution has on the card.

UT is CPU limited so the dropoff in framerate isn't dramatic. UT spends a lot of time on lighting and model calculations so a fast CPU is essential for decent UT gaming at high res. The P4 and Radeon don't disappoint.

Next up we have 3DMark 2001 Professional. This benchmark is all about the card. Card clocks and driver tweaks have a large effect on 3DMark scores. The following result is from the default bench, no driver tweaks and stock card clocks.

That's an impressive score given the platform and it's the highest default bench score I've yet seen without any kind of tweaking. That's a straight out of the box score and very impressive. It's a good 700 points clear of a GF3 running on the same system.

We can see why NVIDIA released the Ti500 to compete with the Radeon 8500, since with the right drivers it's a very impressive performer. Remember, all these benchmarks were done on Windows XP Professional.

Overclocking

From what I've seen and read, the R8500 cards are responding well to overclocking. While overclocking and testing on the card has been limited, I was able to push the card to 312/313 for some tests and benchmarks. The highest stable overclock that ran all the games and tests without any artifacts and without using special cooling was 305/305. Here's a 3DMark result @ 305/305.

We're seeing a 3% increase in 3DMark for a roughly 10% increase in both core and memory clocks. Not too shabby, and of course 3DMark responds better in some tests than others: those like Nature, where the T&L unit is being exercised, depend on core clock speed, while others like Dragothic perhaps depend more on memory performance.
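As a quick sanity check on those percentages, the sketch below works out the clock increase from 275MHz to 305MHz and how much of it shows up in the 3DMark score. The 3% score gain is taken from the text above rather than recomputed from raw scores, and the function and variable names are illustrative only.

```python
def percent_increase(old, new):
    """Percentage increase going from old to new."""
    return (new - old) / old * 100


STOCK_MHZ = 275
OVERCLOCK_MHZ = 305

# Score gain as quoted in the review, not derived from raw 3DMark scores.
SCORE_GAIN_PERCENT = 3.0

clock_gain = percent_increase(STOCK_MHZ, OVERCLOCK_MHZ)

# Fraction of the clock increase that turned into benchmark score.
scaling = SCORE_GAIN_PERCENT / clock_gain

print(f"Clock increase: {clock_gain:.1f}%")
print(f"Score increase: {SCORE_GAIN_PERCENT:.1f}%")
print(f"Scaling: {scaling:.2f} of the clock gain shows up in the score")
```

A scaling figure well below 1 suggests the overall 3DMark score is not bound by card clocks alone on this platform; CPU and system memory play their part too.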

We see similar 2 to 5% increases in Quake3 by upping the clocks to 305/305 so increased performance is quite consistent. I feel the Radeon responds well to overclocking, just like the GeForce3 series it competes against.

Conclusion

I'd love to give the Radeon an Editors Choice Award, I really would. I love the hardware, it's a nice design, support for DX8.1 is good and so on. But the drivers aren't what I'd call production usable. For instance, during the writing of the last half of this review, following a reboot to install UniTuner, I was suddenly left with only 60Hz as a refresh rate.

While I can apparently set other refresh rates, with the Windows display control panel, Powerstrip and UniTuner all reporting success in setting different refresh rates, my eyes and monitor both tell me otherwise.

Accessing the OSD for my Sony G400 tells me that 60Hz is what's being used to drive it, despite various software supposedly setting other refresh rates. I can only conclude this is a driver issue. Reinstalling the driver hasn't helped and I shouldn't have to delve into the registry manually to fix things. Refresh rate switching is a standard driver function and should just work, no questions.

However, with the new drivers the card performs very well. I played Quake3, UT, Colin McRae 2, Serious Sam, Max Payne and also some Championship Manager 00/01 during the course of having the card and also a very brief shot of Half Life: Opposing Force, all without any problems, performance issues or serious rendering issues. In that respect, I award the card points.

Hopefully the drivers continue to get better as time goes on. It's just unfortunate they weren't better than this out of the box. For those willing to persevere, however, not that you should have to, it's a fine card, a very good performer and recommended if you like your image quality sharp and your DVD playback smooth.

Just short of an Editors Choice Award on account of the driver issues and problems installing and testing the card. Finally, while I would have liked to have gone over SMOOTHVISION in more detail, we just didn't have time. I'm happy to direct you to this article at AnandTech that goes into excellent detail on SMOOTHVISION and AA in general. While not in-house Hexus material, it's an excellent read and we're happy to recommend it!

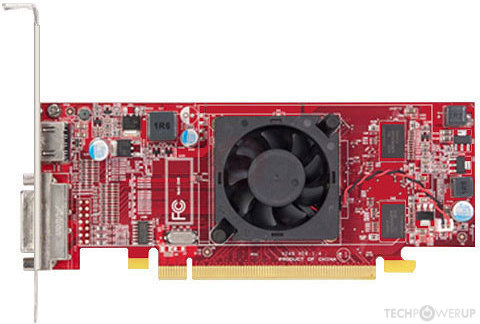

With the Radeon HD 5670 and HD 5450, ATI has moved its focus to the budget graphics card segment. This move hasn't been the best for ATI though, because as we saw with the HD 5670, it is the price-point that takes center-stage rather than the performance. As a result, at its current price, the HD 5450 is somewhat of a disappointment.

The HD 5450 is available with either 512MB or 1GB of video memory. It has a core that is clocked at 650MHz and a memory clock speed of 800MHz. Although it uses DDR3 RAM, its memory interface is only 64 bits wide, which is below par. Like the other 5000 series cards from ATI, the HD 5450 supports DirectX 11 and ATI's Eyefinity technology (which allows one card to output to multiple displays).

We got a reference card from ATI, so the design is sure to change in the hands of different manufacturers. The reference card had a small fan embedded in a heatsink over the core and three ports: VGA, HDMI and DVI. The card is slim and small and will fit into a regular cabinet without any issues. The card also does not require any additional power, so there is no power input connector.

The card also has low power requirements -- 6.4 watts at idle and about 19 watts during regular usage. Owing to its small size, it runs silently and has no temperature issues. At full load in an open setup, the core temperature went up to 47 degrees while at idle it dropped to 35 degrees. All in all, the HD 5450 is perfectly designed to fit into an HTPC.

The stand-out feature of the HD 5450 is that it is the most inexpensive DirectX 11 card in the market. Unfortunately, that does not mean its performance is noteworthy. Overall, it is a card with one of the lowest performance scores that we've reviewed. In fact, its scores even fall short of the HD 4550.

Performance-wise, among ATI cards, we would put it between the HD 4350 and the HD 4550. In 3D Mark 2006, the HD 5450 returned a score of 3792. In 3D Mark Vantage's Entry preset, the score was 6916. The gaming benchmarks proved that the Radeon should not be considered if you want to play the latest games, even at low resolutions and settings. We tested the card using Crysis, Far Cry 2 and Tom Clancy's H.A.W.X and in all cases (except one) the frame-rates were unable to cross 20fps.

The ATI Radeon HD 5450 (1GB) is a fair product if you're looking for a card for your home theatre PC and a good one if you are especially concerned about DirectX 11. However, there are also other cards that we would recommend over it, including the HD 4550 and Nvidia's Geforce GT120. On the plus side, it's currently the cheapest way to get DX11 at your fingertips.